William Moses

Massachusetts Institute of Technology

As a student at a Virginia high school focused on science and technology, William Moses got research experience at the Naval Research Laboratory, working on wireless communication spectra and data transfer and earning a government security clearance.

But Moses knew his calling was creating software tools that make it easier for computer science novices to write streamlined code like experts.

“I think that’s the most interesting place in this (high-performance computing) landscape to be,” says Moses, a computer science doctoral student at the Massachusetts Institute of Technology and a Department of Energy Computational Science Graduate Fellowship (DOE CSGF) recipient. “It’s about creating abstractions” of programming models “that allow people to do things that they would otherwise be unable to do.”

Moses’ milieu is compilers, software that translates human-written source code into instructions a computer can execute. Compilers also can optimize programs to boost efficiency. For example, they might convert code from a serial, step-by-step approach to a parallel format that divides a problem among multiple processors, which work on their pieces simultaneously to reach an overall answer more quickly.
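The serial-versus-parallel idea can be sketched in a few lines of Python. This is a hypothetical illustration written by hand, not what any compiler actually emits: the function names are invented, and a compiler would perform this kind of transformation on its internal representation of the code. The parallel version splits the index range into chunks, solves each chunk separately and combines the partial results, which must match the serial answer.

```python
from concurrent.futures import ThreadPoolExecutor


def serial_sum_of_squares(n):
    """Step-by-step: one loop visits every index in order."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def chunk_sum_of_squares(start, stop):
    """The work for one chunk of the index range [start, stop)."""
    return sum(i * i for i in range(start, stop))


def parallel_sum_of_squares(n, workers=4):
    """Divide [0, n) into contiguous chunks, solve each, then combine."""
    # Ceiling division so every index is covered; max(1, ...) avoids a zero step when n == 0.
    step = max(1, (n + workers - 1) // workers)
    bounds = [(lo, min(lo + step, n)) for lo in range(0, n, step)]
    # Threads illustrate the split/solve/combine structure only; CPython's global
    # interpreter lock keeps pure-Python arithmetic from truly running on several cores.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = pool.map(lambda b: chunk_sum_of_squares(*b), bounds)
    return sum(partials)


print(serial_sum_of_squares(1000), parallel_sum_of_squares(1000))  # 332833500 332833500
```

The combining step works here because addition is associative, so the chunks can be summed in any grouping; a compiler must prove that kind of property before it can safely parallelize a loop.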

As an undergraduate working with Charles Leiserson of MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), now his doctoral advisor, Moses tackled a shortcoming in LLVM, a compiler infrastructure widely used in high-performance computing.

“Compilers didn’t have a good representation of parallel programs,” Moses says. If a researcher introduced a parallel loop to use a multicore processor, for instance, the code often ran slower, because the compiler could no longer apply many of the optimizations it performs on serial code. Moses, with Leiserson and MIT research scientist Tao Schardl, devised an approach that let LLVM apply its understanding of serial code to analyze and optimize parallel programs. The approach, called Tapir, modified or added only about 6,000 lines of LLVM’s roughly 4-million-line codebase.

Tapir won a best paper award at the 2017 Symposium on Principles and Practice of Parallel Programming. Since then, Moses has extended Tapir with parallel-specific capabilities, and researchers have adopted the concept in other parallel compiler frameworks.

More recently, Moses tackled a problem involving machine learning (ML) and automatic differentiation (AD), in which software automatically calculates derivatives: quantities describing how a function’s output changes with respect to its input variables. (Velocity, for example, is the derivative of a moving object’s position with respect to time.)
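To make the idea concrete, here is a minimal sketch of one common AD technique, forward mode with dual numbers, applied to the velocity example. The `Dual` class, the `derivative` helper and the `position` trajectory are hypothetical illustrations. Enzyme itself works differently: it differentiates LLVM’s intermediate representation inside the compiler rather than overloading arithmetic operators as this sketch does.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dual:
    """A value paired with its derivative: val + der * eps, where eps**2 == 0."""
    val: float
    der: float = 0.0

    def __add__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        # Sum rule: (u + v)' = u' + v'
        return Dual(self.val + other.val, self.der + other.der)

    __radd__ = __add__

    def __mul__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        # Product rule: (u * v)' = u' * v + u * v'
        return Dual(self.val * other.val, self.der * other.val + self.val * other.der)

    __rmul__ = __mul__


def derivative(f, x):
    """Evaluate f at x while carrying d/dx alongside, seeding dx/dx = 1."""
    return f(Dual(x, 1.0)).der


def position(t):
    # Hypothetical trajectory: x(t) = 5 t^2 + 2 t, so velocity is 10 t + 2
    return 5 * t * t + 2 * t


print(derivative(position, 3.0))  # 32.0, the velocity at t = 3
```

The derivative comes out exact, not approximated by finite differences, because every arithmetic step applies the corresponding calculus rule as the program runs.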

Differentiation is common in scientific codes and key to training many ML models. But researchers who write their programs in popular languages such as C++ often must rewrite them in ML-specific frameworks such as PyTorch or TensorFlow to obtain derivatives. “You’d have to rewrite your entire codebase” and other software that relies on it, Moses says, wasting time learning a new language instead of improving the actual simulation. That limits ML’s use in areas such as climate modeling.

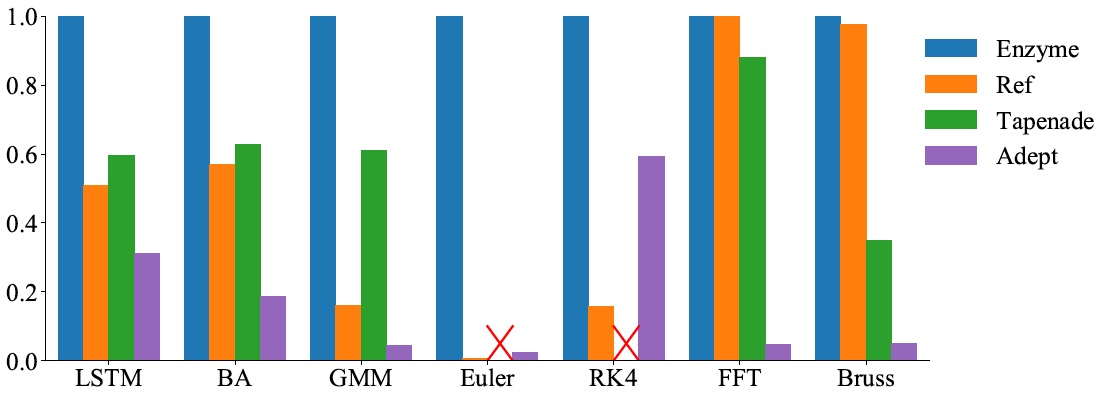

Moses and fellow graduate student Valentin Churavy developed Enzyme, an LLVM plug-in that lets developers automatically differentiate code written in a range of languages. Because Enzyme works inside the compiler, programs can be optimized before AD is applied, boosting speed by as much as four times. The team presented the project in December 2020 at the Conference on Neural Information Processing Systems, and CSAIL promoted it in a news release.

In a practicum at Argonne National Laboratory (conducted virtually) in early 2020, Moses, Churavy and collaborators extended Enzyme for use with graphics processing units (GPUs), accelerators found in most of today’s supercomputers. In one test, the team’s solution enabled code optimizations that boosted performance by orders of magnitude.

For his 2019 Lawrence Berkeley National Laboratory practicum, Moses worked with Bert de Jong on quantum computing. Quantum computers exploit the physics that governs particles at matter’s tiniest scales and are expected to be vastly faster than classical machines on certain problems, but the quantum bits that perform calculations are error-prone, making some quantum algorithms impractical.

Quantum programs are compiled much as classical ones are, and researchers must understand how the quantum hardware will behave to map code onto it. Moses used data from previous quantum computing runs to build error models, with the goal of a system that constructs such models automatically. Characterizing error rates could help predict how well a quantum circuit will run, letting future programs capitalize on the machine’s underlying behavior.
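As a deliberately simplified illustration of how characterized error rates can predict a circuit’s outcome, the sketch below assumes every gate fails independently with a fixed probability, so the chance that a run finishes with no error is the product of each gate’s success probability. The gate categories, counts and rates are hypothetical; real error models are typically richer, accounting for effects such as readout errors and crosstalk between qubits.

```python
from math import prod


def estimated_success_probability(gate_counts, error_rates):
    """Probability that no gate fails, assuming independent gate errors.

    P(success) = product over gate types g of (1 - p_g) ** n_g,
    where p_g is the per-gate error rate and n_g the number of such gates.
    """
    return prod((1.0 - error_rates[g]) ** n for g, n in gate_counts.items())


# Hypothetical per-gate error rates, as might be fitted from earlier runs
rates = {"single_qubit": 0.001, "two_qubit": 0.01}
# Hypothetical circuit: 20 single-qubit gates and 10 two-qubit gates
circuit = {"single_qubit": 20, "two_qubit": 10}

print(round(estimated_success_probability(circuit, rates), 3))  # roughly 0.886
```

Even this crude model shows why error characterization matters to a compiler: two-qubit gates dominate the loss here, so a mapping that uses fewer of them would be predicted to run more reliably.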

Moses says the practicum let him apply his machine-learning skills to a new domain and spend time as a tool user. “If you want to be the best maker of tools, you need to understand fundamentally what all the problems are inside of that space. Plus it’s a good way to learn.”

Moses is unsure of his post-graduation plans, but he wants to keep working on problems that push the boundaries of what non-experts can do with expert programming techniques.

Image caption: Relative speedup of the Enzyme LLVM plug-in compared with earlier automatic differentiation approaches on a suite of benchmark programs. Higher is better. A red X indicates a system that is incompatible with the benchmark. Credit: Moses, William S., and Churavy, Valentin: “Instead of Rewriting Foreign Code for Machine Learning, Automatically Synthesize Fast Gradients.” Advances in Neural Information Processing Systems 33 (2020).