Now on ASCR Discovery: Tackling a Trillion

To study the development of the universe or plasma physics, scientists run into what could be the biggest big-data problem: simulations based on trillions of particles. With every step forward in time, the computer model pumps out tens of terabytes of data.

To run and analyze these huge calculations, a team of scientists has developed BD-CATS, a program to run trillion-particle problems from start to finish on the most powerful supercomputers in the United States.

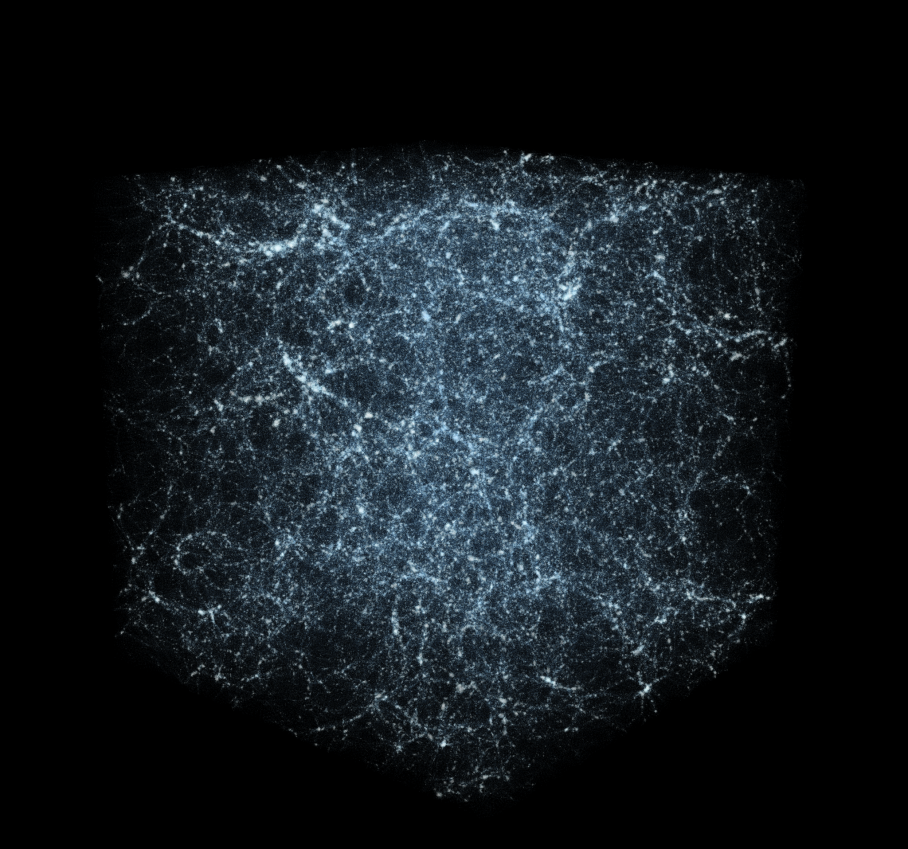

Each particle in a cosmology simulation, for example, represents a quantity of dark matter. As the model progresses through time, dense dark matter regions exert a gravitational pull on surrounding matter, pulling more of it into the dense region and forming clumps.

As Prabhat – group leader for Data and Analytics Services at the Department of Energy’s National Energy Research Scientific Computing Center (NERSC) – says, “If we got the physics right, we start seeing structures in the universe, like clusters of galaxies which form in the densest regions of dark matter. The more particles that you add, the more accurate the results are.”

Prabhat is part of a team presenting trillion-particle simulation results Tuesday, Nov. 17, at the SC15 supercomputing conference in Austin, Texas.

Science is rife with simulations that generate large data sets, including climate, groundwater movement and molecular dynamics, says Suren Byna, a computer scientist in the Scientific Data Management group at Lawrence Berkeley National Laboratory (LBNL). These problems might require tracking only billions of particles today, but data analytics that work at the leading edge – as in cosmology and plasma physics – will improve future simulations across many domains.

The system that made this possible, BD-CATS, emerged from a collaboration between three other projects.

First, MANTISSA “formulates and implements highly scalable algorithms for big-data analytics,” Prabhat says. Scalable algorithms generally run faster as the number of processors they’re on increases. Clustering, which sorts data into related groups, is a major challenge for the kind of large data sets MANTISSA faces.

Second, for fast data input and output (I/O), BD-CATS uses Byna’s work on ExaHDF5, a version of hierarchical data format version 5 (HDF5), a file format to organize and store large amounts of information. ExaHDF5 efficiently scales the original program, making it run well even for files that are tens of terabytes in size.

Third is Intel Labs’ project to parallelize and optimize big-data analytics, making them run efficiently on a large number of computer processors. Md. Mostofa Ali Patwary, a research scientist in Intel’s Parallel Computing Lab, addressed clustering with a scalable implementation of DBSCAN, a longstanding algorithm designed for that purpose. Patwary and his colleagues balanced the analysis across supercomputer nodes to reduce time spent on communication.

As Patwary explains, the projects combine to create an end-to-end workflow for trillion-particle simulations. BD-CATS can load the data from parallel file systems (which spread data across multiple storage sites), create data structures on individual computer nodes, perform clustering and store results.

Read more at ASCR Discovery, a website highlighting research supported by the Department of Energy’s Advanced Scientific Computing Research program.

Image caption: This simulation of the universe was done with NyX, a large-scale code for massively parallel machines. Courtesy of Prabhat and Burlen Loring, LBNL.